In my thesis I used the simulator software NEST. It is one of the most advanced neural simulators, offers a large number of neuron models, and has a welcoming community. After implementing a dopamine-based reinforcement learning (RL) algorithm with NEST, however, I ran into problems using the software for machine learning (ML). Machine learning algorithms need rapid update cycles, whereas NEST is designed to run large simulations for a long time and can scale up to supercomputers. I also used the computing resources of the Leibniz Rechenzentrum, but I ran multiple jobs in parallel there instead of scaling up a single simulation.

The tools people use are essential. Any good library or standard encodes design work efficiently and saves many hours. The success story of computing is the history of thousands of iterations improving designs. I believe that software should not expect its users to be experts in it. The mailing list often saw people with questions similar to the problem I encountered when using NEST with rapid cycles. Many of the researchers coming from neuroscience are not experienced developers, and I believe that in the future more people will look into the intersection of ML and neuroscience. I felt compelled to act on what I knew. NEST is open source, so I joined the fortnightly meetings and discussed my idea. I wrote a proposal and discussed it again. Then I submitted a pull request. Unfortunately, it is not a solution covering all use cases, and it has been closed for now. I understand the decision from the perspective of neuroscientists, yet it is unfortunate for machine learning.

At this point I think it is reasonable to halt my efforts in this area. The hurdle of making NEST fit for machine learning is too high to clear on the side.

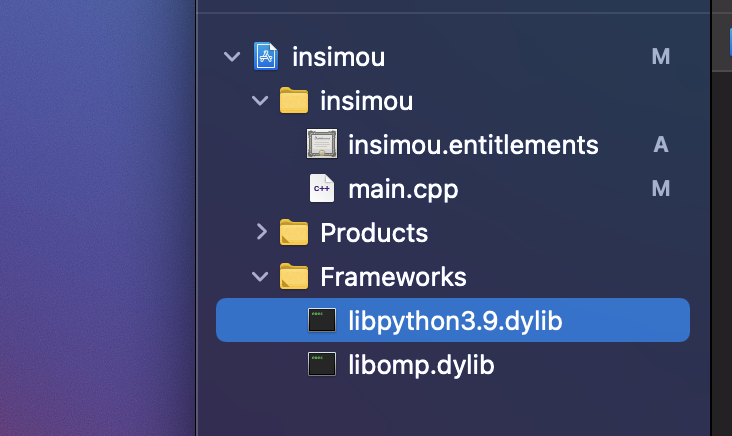

To research building homegrown neuromorphic computers, I started to port the SNN-RL framework to a custom C++ back-end with multiprocessing. This skips the inefficiencies of NEST for this use case and enables real-time processing. Once it has been shown to work on von Neumann computers, I will showcase it on a real pole-balancing apparatus, bringing the algorithm into the real world. The apparatus is almost finished. The algorithm will later be extended to run on FPGAs, allowing per-neuron multiprocessing, i.e. full neuromorphic computing. My approach will not be revolutionary, but it will prove that reinforcement learning with SNNs can solve real-world problems on custom hardware.

The new library will be open source and can be found here. The URL might break, as I may change the name. I want to continue this research in my free time. Although my day job includes machine learning, this work is still too experimental to be applicable in industry; one potential use case is real-time control for embedded devices. Now that I have my first experience with prolonged 40h+ weeks, I need my free time to keep my balance. On weekends I am happy to do other things for a while instead of thinking about code and staring at screens. Updates on this topic will follow.

I have found a mixture of different media the most fruitful for office-based intellectual work.

For many people, and writers are famous for this, the tools of choice are paper and pen. I feel limited by pen and paper in two ways: they tie me to sitting in a chair, and erasing is not easy. When erasing is hard, you are restricted to writing sentences and drawing simple shapes, which limits complexity.

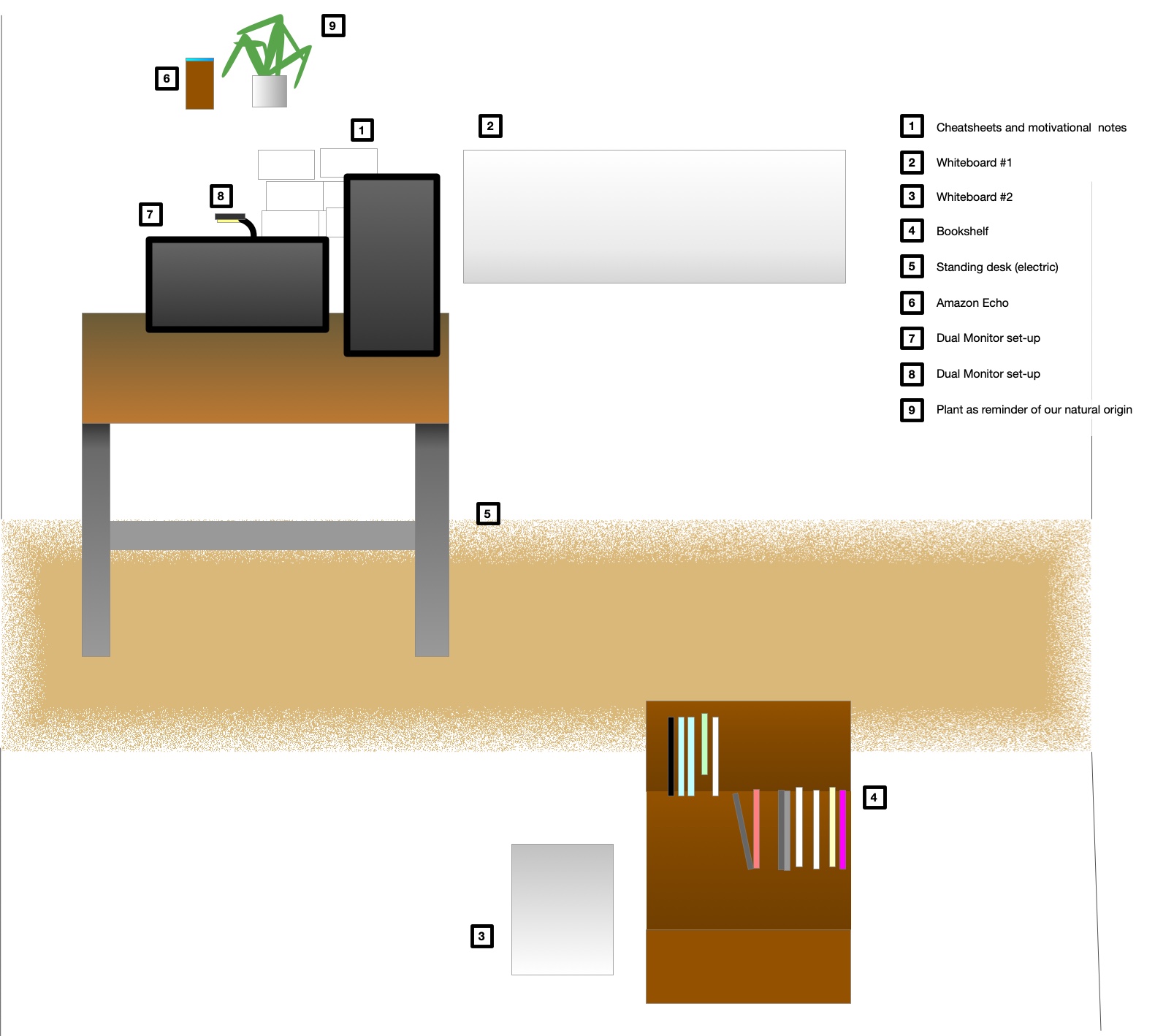

I have found the saying "out of sight, out of mind" to be true at heart. Therefore I keep my current thoughts on whiteboards on the walls, where I can edit them to include new insights. Once a model is complete, I create a digital copy for my archives. Some of the resulting graphics can be found on this blog.

I also try to use the concept of spatially arranged information. You can arrange different windows across many screens or just use one huge screen. Using two-dimensional space is helpful in many situations, but a lot of information still stays hidden in files. Another issue is that some tasks require a lot of space, so you maximize a window to full width on your main screen, which hides all other windows.